Blog posts are like buses sometimes...

I've spent time over the last month enabling Blackwell support on NVK, the Mesa Vulkan driver for NVIDIA GPUs. Faith from Collabora, the NVK maintainer, has cleaned up all the major pieces of this work and landed them in Mesa this week. Mesa 25.2 should ship with a functioning NVK on Blackwell. The code currently in Mesa main passes all tests in the Vulkan CTS.

Quick summary of the major fun points:

Ben @ NVIDIA had done the initial kernel bringup on the r570 firmware in the nouveau driver. I worked with Ben on solidifying that work and ironing out a bunch of memory leaks and regressions that snuck in.

Once the kernel was stable, there were a number of differences between Ada and Blackwell that needed to be resolved. Thanks to Faith, Mel and Mohamed for their help, and NVIDIA for providing headers and other info.

I did most of the work on a GB203 laptop and a desktop 5080.

1. Instruction encoding: a bunch of instructions changed how they were encoded. Mel helped sort out most of those early on.

2. Compute/QMD: the QMD which is used to launch compute shaders, has a new encoding. NVIDIA released the official QMD headers which made this easier in the end.

3. Texture headers: texture headers are encoded differently from Hopper onwards, so we had to use new NVIDIA headers to encode those properly.

4. Depth/Stencil: NVIDIA added support for separate d/s planes and this also has some knock-on effects on surface layouts.

5. Surface layout changes: NVIDIA attaches a memory kind to memory allocations. Due to changes in Blackwell, they now use a generic kind for all allocations, so you no longer know the internal bpp-dependent layout of the surfaces. This means changes to the dma-copy engine to provide that info. It also means we have some modifier changes to cook with NVIDIA over the next few weeks, at least for 8/16 bpp surfaces. Mohamed helped get this work and host image copy support done.

6. One thing we haven't merged is bound texture support. Currently Blackwell is using bindless textures, which might be a little slower. Due to changes in the texture instruction encoding, you have to load texture handles to intermediate uniform registers before using them as bound handles. This causes a lot of fun with flow control and when you can spill uniform registers. I've written a few efforts at using bound textures, so we understand how to use them; we just have some compiler issues to sort out to maybe get it across the line.

7. Proper instruction scheduling hasn't landed yet. I have a spreadsheet with all the figures, and I've started typing, so I will try to get that into an MR before I take some holidays.

I should have mentioned this here a week ago. The Vulkan AV1 encode extension has been out for a while, and I'd done the initial work on enabling it with radv on AMD GPUs. I then left it in a branch, which Benjamin from AMD picked up and fixed a bunch of bugs in, and then we both got distracted. I realised when doing VP9 that it hadn't landed, so I did a bit of cleanup. Then David from AMD picked it up and carried it over the last mile, and it got merged last week.

So radv on supported hw now supports all Vulkan decode/encode formats currently available.

It’s hot out. I know this because Big Triangle allowed me a peek through my three-sided window for good behavior, and all the pixels were red. Sure am glad I’m inside.

Today’s a new day in a new month, which means it’s time to talk about new GL stuff. I’m allowed to do that once in a while, even though GL stuff is never actually new. In this post we’re going to be looking at GL_NV_timeline_semaphore, an extension everyone has definitely heard of.

Mesa has supported GL_EXT_external_objects for a long while, and it’s no exaggeration to say that this is the reference implementation: there are no proprietary drivers of which I’m aware that can pass the super-strict piglit tests we’ve accumulated over the years. Yes, that includes Green Triangle. Also Red Triangle, but we knew that already–it’s in the name.

This MR adds support for importing and using Vulkan timeline semaphores into GL, which further improves interop-reliant workflows by eliminating binary semaphore requirements. Zink supports it anywhere that additionally supports VK_KHR_timeline_semaphore, which is to say that any platform capable of supporting the base external objects spec will also support this.

For testing, we get to have even more fun with the industry-standard ping-pong test originally contributed by @gfxstrand. This verifies that timeline operations function as expected on every side of the API divide.
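For anyone who hasn't stared at timeline semaphores before, here is a toy model of the ping-pong idea in Python. It is only a sketch of the semantics (a single counter that only ever goes up, waits that block until the counter reaches a value), not the GL or Vulkan API and not the actual piglit test; `TimelineSemaphore`, `ping_pong` and the "gl"/"vk" player names are all made up for illustration.

```python
import threading


class TimelineSemaphore:
    """Toy model of a timeline semaphore: a monotonically increasing counter."""

    def __init__(self, value=0):
        self._value = value
        self._cond = threading.Condition()

    def signal(self, value):
        with self._cond:
            if value <= self._value:
                raise ValueError("timeline values must strictly increase")
            self._value = value
            self._cond.notify_all()

    def wait(self, value):
        # Block until the counter has reached at least `value`.
        with self._cond:
            self._cond.wait_for(lambda: self._value >= value)


def ping_pong(rounds):
    """Two hypothetical API sides take turns using one shared timeline."""
    sem = TimelineSemaphore()
    log = []

    def player(name, first_turn):
        for turn in range(first_turn, 2 * rounds + 1, 2):
            sem.wait(turn - 1)        # wait for the other side's last signal
            log.append((name, turn))
            sem.signal(turn)          # hand the turn over

    threads = [
        threading.Thread(target=player, args=("gl", 1)),
        threading.Thread(target=player, args=("vk", 2)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log


log = ping_pong(rounds=3)
print(log)
```

The point of the model is that one object carries the whole back-and-forth: each side waits on a value and signals the next one, where binary semaphores would need a fresh signal/wait pairing for every hand-off.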

Next up: more optimizations. How fast is too fast?

Debian uses LDAP for storing information about users, hosts and other objects. The wrapping around this is called userdir-ldap, or ud-ldap for short. It provides a mail gateway, web UI and a couple of schemas for different object types.

Back in late 2018 and early 2019, we (DSA) removed support for ISO5218 in userdir-ldap, and removed the corresponding data. This made some people upset, since they were using that information, as imprecise as it was, to infer people’s pronouns. ISO5218 has four values for sex: unknown, male, female and N/A. This might have been acceptable when the standard was new (in 1976), but it wasn’t acceptable any longer in 2018.

A couple of days ago, I finally got around to adding support to userdir-ldap to let people specify their pronouns. As it should be, it’s a free-form text field. (We don’t have localised fields in LDAP, so it probably makes sense for people to put the English version of their pronouns there, but the software does not try to control that.)

So far, it’s only exposed through the LDAP gateway, not in the web UI.

If you’re a Debian developer, you can set your pronouns using

echo "pronouns: he/him" | gpg --clearsign | mail changes@db.debian.org

I see that four people have already done so in the time I’ve taken to write this post.

The DRM GPU scheduler is a shared Direct Rendering Manager (DRM) Linux Kernel level component used by a number of GPU drivers for managing job submissions from multiple rendering contexts to the hardware. Some of the basic functions it can provide are dependency resolving, timeout detection, and most importantly for this article, scheduling algorithms whose essential purpose is picking the next queued unit of work to execute once there is capacity on the GPU.

Different kernel drivers use the scheduler in slightly different ways: some simply need the dependency resolving and timeout detection part, with the actual scheduling happening in the proprietary firmware, while others rely on the scheduler’s algorithms for choosing what to run next. The latter are what the work described here aims to improve.

More details about the other functionality provided by the scheduler, including some low level implementation details, are available in the generated kernel documentation repository[1].

Three DRM scheduler data structures (or objects) are relevant for this topic: the scheduler, scheduling entities and jobs.

First we have the scheduler itself, which usually corresponds with some hardware unit which can execute certain types of work. For example, the render engine is often a single hardware instance in a GPU and needs arbitration for multiple clients to be able to use it simultaneously.

Then there are scheduling entities, or in short entities, which broadly speaking correspond with userspace rendering contexts. Typically when a userspace client opens a render node, one such rendering context is created. Some drivers also allow userspace to create multiple contexts per open file.

Finally there are jobs which represent units of work submitted from userspace into the kernel. These are typically created as a result of userspace doing an ioctl(2) operation, which is specific to the driver in question.

Jobs are usually associated with entities and entities are then executed by schedulers. Each scheduler instance will have a list of runnable entities (entities with at least one queued job) and when the GPU is available to execute something it will need to pick one of them.

Typically every userspace client will submit at least one such job per rendered frame and the desktop compositor may issue one or more to render the final screen image. Hence, on a busy graphical desktop, we can find dozens of active entities submitting multiple GPU jobs, sixty or more times per second.

In order to select the next entity to run, the scheduler defaults to the First In First Out (FIFO) mode of operation where the selection criterion is the job submit time.

The FIFO algorithm in general has some well known disadvantages around fairness and latency. Also, because the selection criterion is based on job submit time, it couples the selection with the CPU scheduler, which is not desirable because it creates an artificial coupling between different schedulers, different sets of tasks (CPU processes and GPU tasks), and different hardware blocks.

This is further amplified by the lack of guarantee that clients are submitting jobs with equal pacing (not all clients may be synchronised to the display refresh rate, or not all may be able to maintain it), the fact their per frame submissions may consist of an unequal number of jobs, and last but not least the lack of preemption support. The latter is true both for the DRM scheduler itself and for many GPUs in their hardware capabilities.

Apart from uneven GPU time distribution, the end result of the FIFO algorithm picking the sub-optimal entity can be dropped frames and choppy rendering.

Apart from the default FIFO scheduling algorithm, the scheduler also implements the round-robin (RR) strategy, which can be selected as an alternative at kernel boot time via a kernel argument. Round-robin, however, suffers from its own set of problems.

Whereas round-robin is typically considered a fair algorithm when used in systems with preemption support and ability to assign fixed execution quanta, in the context of GPU scheduling this fairness property does not hold. Here quanta are defined by userspace job submissions and, as mentioned before, the number of submitted jobs per rendered frame can also differ between different clients.
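To make the point concrete, here is a small sketch in Python (not kernel code) of round-robin without preemption, where every turn runs one whole job. The client names and the 1 ms and 5 ms job durations are invented purely for illustration.

```python
from collections import deque


def round_robin_gpu_time(clients, rounds):
    """Simulate non-preemptive round-robin: each turn runs one whole job.

    `clients` maps a client name to the GPU time (in ms) of each job it
    submits. Returns the total GPU time per client after `rounds` rotations.
    """
    queue = deque(clients)
    used = {name: 0.0 for name in clients}
    for _ in range(rounds * len(clients)):
        name = queue.popleft()
        used[name] += clients[name]  # the job runs to completion
        queue.append(name)
    return used


# Hypothetical workloads: equal number of turns, very unequal job sizes.
usage = round_robin_gpu_time({"light": 1.0, "heavy": 5.0}, rounds=100)
share = {name: t / sum(usage.values()) for name, t in usage.items()}
print(usage, share)
```

Both clients get exactly the same number of turns, yet the one submitting longer jobs ends up with five sixths of the GPU time, because the quantum is whatever userspace submitted.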

The final result can again be unfair distribution of GPU time and missed deadlines.

In fact, round-robin was the initial and only algorithm until FIFO was added to resolve some of these issues. More can be read in the relevant kernel commit. [2]

Another issue in the current scheduler design is the priority queues and the strict priority order execution.

Priority queues serve the purpose of implementing support for entity priority, which usually maps to userspace constructs such as VK_EXT_global_priority and similar. If we look at the wording for this specific Vulkan extension, it is described like this[3]:

The driver implementation *will attempt* to skew hardware resource allocation in favour of the higher-priority task. Therefore, higher-priority work *may retain similar* latency and throughput characteristics even if the system is congested with lower priority work.

As emphasised, the wording is giving implementations leeway to not be entirely strict, while the current scheduler implementation only executes lower priorities when the higher priority queues are all empty. This over strictness can lead to complete starvation of the lower priorities.

To solve both the issue of the weak scheduling algorithm and the issue of priority starvation we tried an algorithm inspired by the Linux kernel’s original Completely Fair Scheduler (CFS)[4].

With this algorithm the next entity to run will be the one with the least virtual GPU time spent so far, where virtual GPU time is calculated from the real GPU time scaled by a factor based on the entity priority.

Since the scheduler already manages a rbtree[5] of entities, sorted by the job submit timestamp, we were able to simply replace that timestamp with the calculated virtual GPU time.

When an entity has nothing more to run it gets removed from the tree and we store the delta between its virtual GPU time and the top of the queue. And when the entity re-enters the tree with a fresh submission, this delta is used to give it a new relative position considering the current head of the queue.
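The selection rule can be sketched in a few lines of Python. This is a simplified model and not the kernel implementation: a heap stands in for the rbtree, every entity always has a job queued (so the leave and re-enter delta described above is left out), and the `SCALE` factors, entity names and job durations are hypothetical values chosen only for illustration.

```python
import heapq

# Hypothetical scaling factors: a larger factor makes virtual time advance
# faster, so the entity gets picked less often. The real factors are not
# given in the text.
SCALE = {"normal": 1.0, "low": 4.0}


def run_fair(entities, num_jobs):
    """Pick the entity with the least virtual GPU time, one job at a time.

    `entities` maps a name to (priority, job duration in ms).
    Returns the order in which entities were picked.
    """
    # Entries are (virtual GPU time, name); the smallest is at the front.
    queue = [(0.0, name) for name in sorted(entities)]
    heapq.heapify(queue)
    order = []
    for _ in range(num_jobs):
        vtime, name = heapq.heappop(queue)
        priority, job_ms = entities[name]
        order.append(name)
        # Real GPU time scaled by the priority factor gives virtual GPU time.
        heapq.heappush(queue, (vtime + job_ms * SCALE[priority], name))
    return order


order = run_fair(
    {"game": ("normal", 2.0), "background": ("low", 2.0)},
    num_jobs=10,
)
print(order)
```

With these made-up factors the low priority entity is picked less often but is never starved: it still gets its turn whenever its virtual GPU time is the smallest in the queue.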

Because the scheduler does not currently track GPU time spent per entity, this is something that we needed to add to make this possible. It did not, however, pose a significant challenge, apart from a slight weakness: the up to date utilisation can lag slightly behind the actual numbers due to some DRM scheduler internal design choices. But that is a different and wider topic which is out of the intended scope for this write-up.

The virtual GPU time selection criterion largely decouples the scheduling decisions from job submission times, and to an extent from submission patterns too, and allows for a fairer GPU time distribution. The caveat is that it is still not entirely fair because, as mentioned before, neither the DRM scheduler nor many GPUs support preemption, which would be required for more fairness.

Because priority is now consolidated into a single entity selection criterion we were also able to remove the per priority queues and eliminate priority based starvation. All entities are now in a single run queue, sorted by the virtual GPU time, and the relative distribution of GPU time between entities of different priorities is controlled by the scaling factor which converts the real GPU time into virtual GPU time.

Another benefit of being able to remove per priority run queues is a code base simplification. Going further than that, if we are able to establish that the fair scheduling algorithm has no regressions compared to FIFO and RR, we can also remove those two which further consolidates the scheduler. So far no regressions have indeed been identified.

As a first example we set up three demanding graphical clients, one of which was set to run with low priority (VK_QUEUE_GLOBAL_PRIORITY_LOW_EXT).

One client is the Unigine Heaven benchmark[6] which is simulating a game, while the other two are two instances of the deferredmultisampling Vulkan demo from Sascha Willems[7], modified to support running with the user specified global priority. Those two are simulating very heavy GPU load running simultaneously with the game.

All tests are run on a Valve Steam Deck OLED with an AMD integrated GPU.

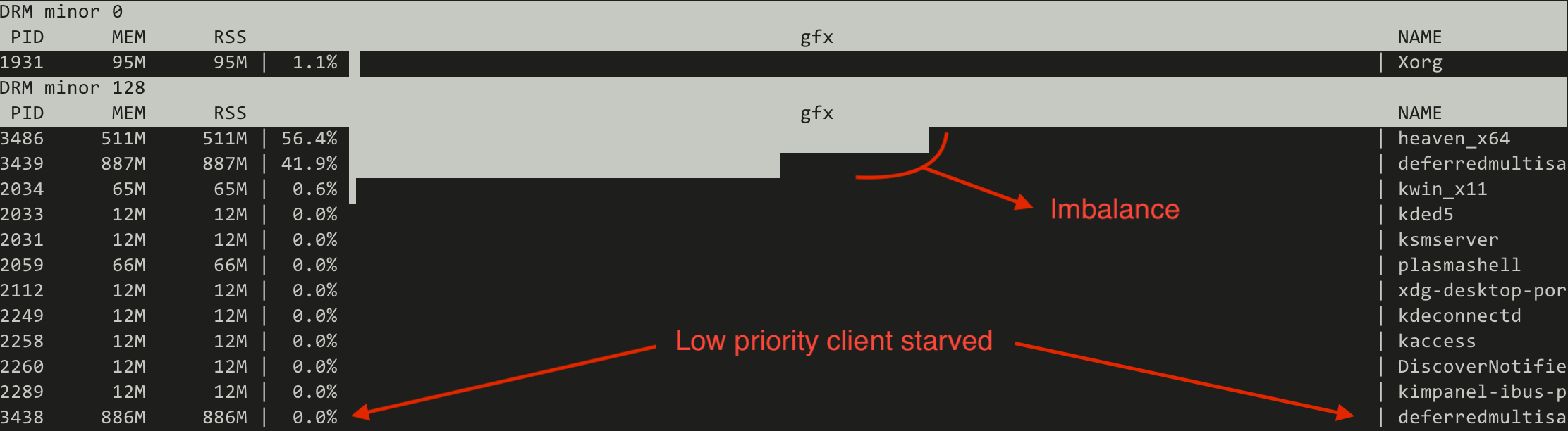

First we try the current FIFO based scheduler and we monitor the GPU utilisation using the gputop[8] tool. We can observe two things:

Switching to the CFS inspired (fair) scheduler the situation changes drastically:

Note that the absolute numbers are not static but represent a trend.

This proves that the new algorithm can make the low priority useful for running heavy GPU tasks in the background, similar to what can be done on the CPU side of things using the nice(1) process priorities.

Apart from experimenting with real world workloads, another functionality we implemented in the scope of this work is a collection of simulated workloads implemented as kernel unit tests based on the recently merged DRM scheduler mock scheduler unit test framework[9][10]. The idea behind those is to make it easy for developers to check for scheduling regressions when modifying the code, without the need to set up sometimes complicated testing environments.

Let us look at a few examples on how the new scheduler compares with FIFO when using those simulated workloads.

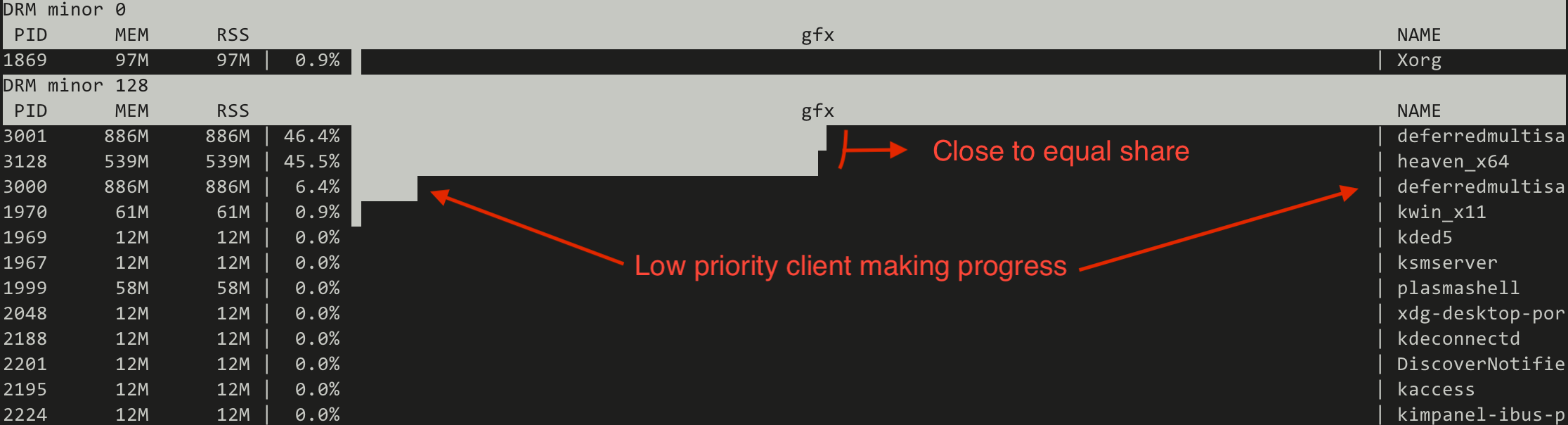

First an easy, albeit exaggerated, illustration of priority starvation improvements.

Here we have a normal priority client and a low priority client submitting many jobs asynchronously (only waiting for the submission to finish after having submitted the last job). We look at the number of outstanding jobs (queue depth - qd) on the Y axis and the passage of time on the X axis. With the FIFO scheduler (blue) we see that the low priority client is not making any progress whatsoever until all submissions of the normal client have been completed. Switching to the CFS inspired scheduler (red) this improves dramatically and we can see the low priority client making slow but steady progress from the start.

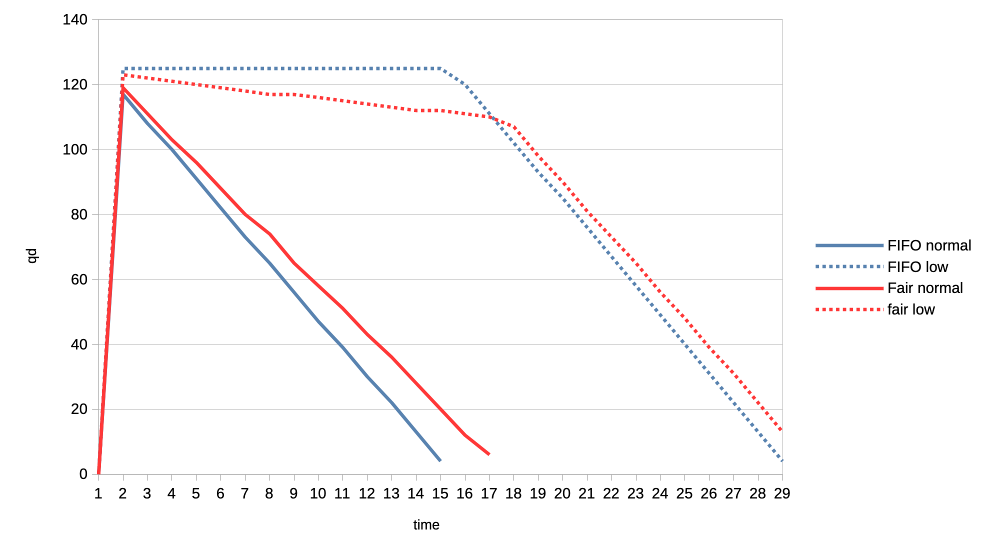

Second example is about fairness where two clients are of equal priority:

Here the interesting observation is that the lines graphed for the new scheduler are much straighter. This means that the GPU time distribution is more equal, or fair, because the selection criterion is decoupled from the job submission time and instead based on each client’s GPU time utilisation.

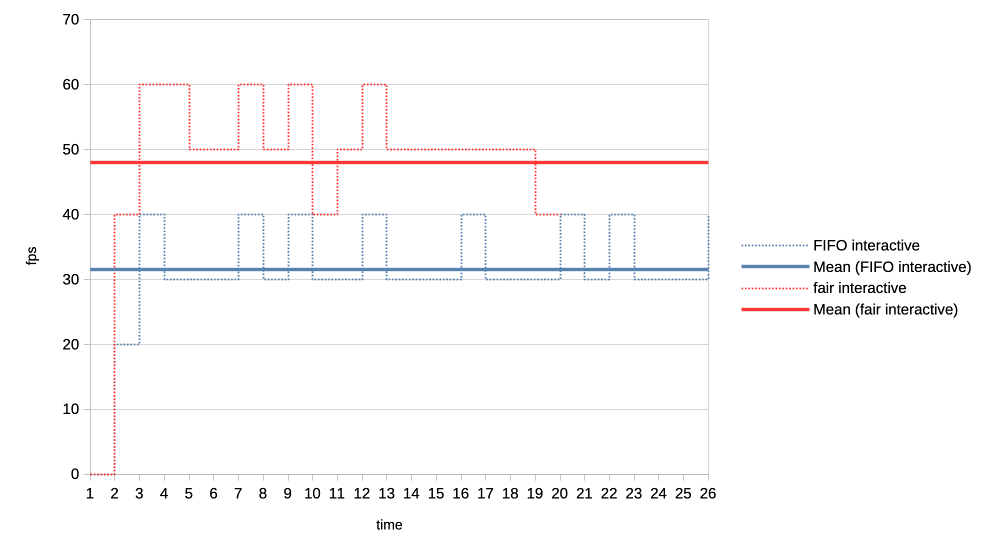

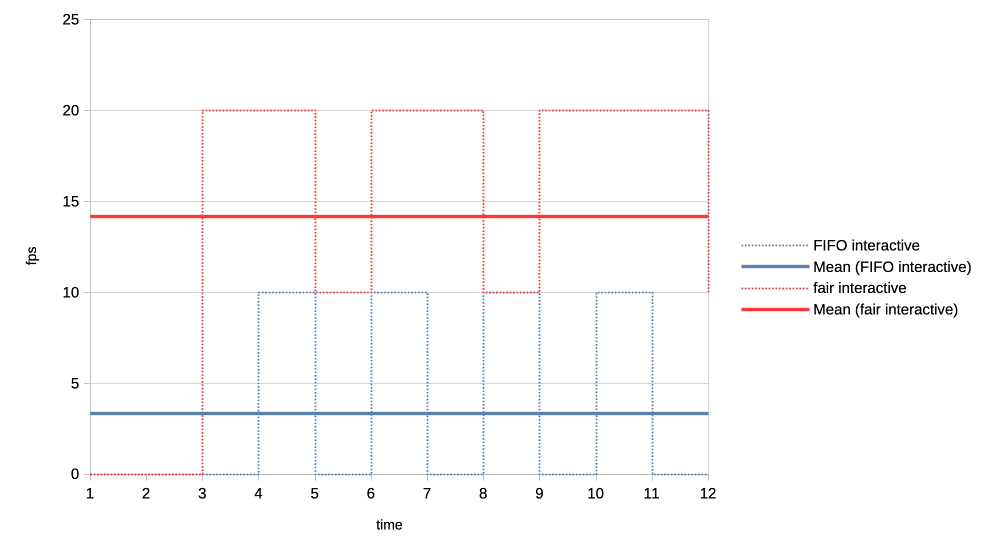

For the final set of test workloads we will look at the rate of progress (aka frames per second, or fps) between different clients.

In both cases we have one client representing a heavy graphical load, and one representing an interactive, lightweight client. They are running in parallel but we will only look at the interactive client in the graphs, because the goal is to look at what frame rate the interactive client can achieve when competing for the GPU. In other words we use that as a proxy for assessing user experience of using the desktop while there is simultaneous heavy GPU usage from another client.

The interactive client is set up to spend 1ms of GPU time in every 10ms period, resulting in an effective GPU load of 10%.

First test is with a heavy client wanting to utilise 75% of the GPU by submitting three 2.5ms jobs back to back, repeating that cycle every 10ms.
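The load figures follow directly from the job durations and the period, which can be checked with a few lines of Python. The helper name `gpu_load` is made up for this sketch, and the frame rate ceiling assumes the interactive client submits exactly one job per frame, which the text does not state explicitly.

```python
def gpu_load(job_ms, jobs_per_period, period_ms):
    """Fraction of GPU time a client asks for within one period."""
    return job_ms * jobs_per_period / period_ms


# Interactive client: one 1ms job every 10ms.
interactive = gpu_load(job_ms=1.0, jobs_per_period=1, period_ms=10.0)

# Heavy client: three 2.5ms jobs back to back, repeated every 10ms.
heavy = gpu_load(job_ms=2.5, jobs_per_period=3, period_ms=10.0)

# Assumption: one job per frame, so the 10ms period caps the frame rate.
max_fps = 1000.0 / 10.0

print(f"interactive {interactive:.0%}, heavy {heavy:.0%}, "
      f"combined {interactive + heavy:.0%}")
print(f"interactive frame rate ceiling: {max_fps:.0f} fps")
```

Since the combined demand stays below 100%, there is in principle enough GPU time for both clients in this first test, so any frame rate lost by the interactive client comes from the order in which jobs are picked rather than from a lack of capacity.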

We can see that the average frame rate the interactive client achieves with the new scheduler is much higher than under the current FIFO algorithm.

For the second test we made the heavy GPU load client even more demanding by making it want to completely monopolise the GPU. It is now submitting four 50ms jobs back to back, and only backing off for 1us before repeating the loop.

Again the new scheduler is able to give significantly more GPU time to the interactive client compared to what FIFO is able to do.

From all the above it appears that the experiment was successful. We were able to simplify the code base, solve the priority starvation and improve scheduling fairness and GPU time allocation for interactive clients. No scheduling regressions have been identified to date.

The complete patch series implementing these changes is available at[11].

Because this work has simplified the scheduler code base and introduced entity GPU time tracking, it also opens up possibilities for future experiments with other modern algorithms. One example could be an EEVDF[12] inspired scheduler, given that algorithm has recently improved upon the kernel’s CPU scheduler and looks potentially promising because it combines fairness and latency in one algorithm.

Another interesting angle is that, as this work implements scheduling based on virtual GPU time, which as a reminder is calculated by scaling the real time by a factor based on entity priority, it can be tied really elegantly to the previously proposed DRM scheduling cgroup controller.

There we had group weights already which can now be used when scaling the virtual time and lead to a simple but effective cgroup controller. This has already been prototyped[13], but more on that in a following blog post.

[2] https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git/commit/?h=v6.16-rc2&id=08fb97de03aa2205c6791301bd83a095abc1949c

[3] https://registry.khronos.org/vulkan/specs/latest/man/html/VK_EXT_global_priority.html

[8] https://gitlab.freedesktop.org/drm/igt-gpu-tools/-/blob/master/tools/gputop.c?ref_type=heads

[9] https://gitlab.freedesktop.org/tursulin/kernel/-/commit/486bdcac6121cfc5356ab75641fc702e41324e27

[10] https://gitlab.freedesktop.org/tursulin/kernel/-/commit/50898d37f652b1f26e9dac225ecd86b3215a4558

[11] https://gitlab.freedesktop.org/tursulin/kernel/-/tree/drm-sched-cfs?ref_type=heads

[13] https://lore.kernel.org/dri-devel/20250502123256.50540-1-tvrtko.ursulin@igalia.com/

Hi all!

This month, two large patch series have been merged into wlroots! The first one is toplevel capture, which will allow tools such as grim and xdg-desktop-portal-wlr to capture the contents of a specific window. The wlroots side is super simple because wlroots just sends an event when a client requests to capture a toplevel. Producing frames for a particular toplevel from scratch would be pretty cumbersome to implement for a compositor, so wlroots also exposes a helper to create a capture source from an arbitrary scene-graph node. The compositor can pass the toplevel’s scene-graph node to this helper to implement toplevel capture. This is pretty flexible and leaves a lot of freedom to the compositor, making it easy to customize the capture result and to add support for other kinds of capture targets! This also handles popups (which need a composition step) and off-screen toplevels (which would otherwise stop rendering) well. The grim and xdg-desktop-portal-wlr sides are not ready yet, but hopefully they shouldn’t be too much work. Still missing are cursors and using a fully transparent background color (right now the background is black).

The other large patch series is color management support (part 1 was merged a while back, part 2, part 3 and part 4 just got merged). This was very challenging because one needs to learn a lot about colors before even understanding how color management should be implemented from a high-level architectural point-of-view. Sway isn’t quite ready yet, we’re missing one last piece of the puzzle to finish up the scene-graph integration. Thanks a lot to Kenny Levinsen, M. Stoeckl and Félix Poisot for going through this big pile of patches and spotting all of the bugs!

Sway 1.11 finally got released, with all of the wlroots 0.19 niceties. I’ve also started the Wayland 1.24 release cycle, hopefully the final release can go out very soon. Speaking of releases, I’ve cut libdrm 2.4.125 with updated kernel headers, an upgraded CI and a GitLab repository rename (“drm” was very confusing and got mixed up with the kernel side). Last, drm_info 2.8.0 adds Apple and MediaTek format modifiers and support for the IN_FORMATS_ASYNC property (contributed by Intel).

David Turner has contributed three optimization patches for libliftoff, with more in the pipeline. Leandro Ribeiro and Sebastian Wick have upstreamed libdisplay-info support for HDR10+ and Dolby Vision vendor-specific video blocks, with HDMI, HDMI Forum and HDMI Forum Sink Capability on the way (yes, these are all separate blocks!).

I’ve migrated the wayland-devel mailing list to a new server powered by Mailman 3 and public-inbox. The old Mailman 2 setup started showing its age more than a decade ago, and it was about time we started a migration. I’ve started making plans for migrating other mailing lists, hopefully we’ll be able to decommission Mailman 2 in the coming months. Next we’ll need to migrate the postfix server over too, but one step at a time.

delthas has plumbed replies and reactions in Goguma’s compact mode. I’ve taken some time to clean up soju’s docs: the Getting started page has been revamped, the contrib/ directory has an index page, and man pages are linkified on the website. Let me know if you have ideas to improve our docs further!

As part of $dayjob I took part in Hackdays 2025, a hackathon organized by DINUM to work on La Suite (open-source productivity software). With my team, we worked on adding support for importing Notion documents into Docs. It was great meeting a lot of European open-source enthusiasts, and I hope our work can eventually be merged!

Phew, this status update ended up being larger than I expected! Perhaps thanks to getting the wlroots and Sway releases out of the way, and spending less time on triaging issues and investigating bugs. Perhaps thanks to a lot of stuff getting merged, after slowly accumulating and growing patches for months. Either way, see you next month for another status update!

This is, to some degree, a followup to this 2014 post. The TLDR of that is that, many a moon ago, the corporate overlords at Microsoft that decide all PC hardware behaviour decreed that the best way to handle an eraser emulation on a stylus is by having a button that is hardcoded in the firmware to, upon press, send a proximity out event for the pen followed by a proximity in event for the eraser tool. Upon release, they dogma'd, said eraser button shall virtually move the eraser out of proximity followed by the pen coming back into proximity. Or, in other words, the pen simulates being inverted to use the eraser, at the push of a button. Truly the future, back in the happy times of the mid 20-teens.

In a world where you don't want to update your software for a new hardware feature, this of course makes perfect sense. In a world where you write software to handle such hardware features, significantly less so.

Anyway, it is now 11 years later, the happy 2010s are over, and Benjamin and I have fixed this very issue in a few udev-hid-bpf programs but I wanted something that's a) more generic and b) configurable by the user. Somehow I am still convinced that disabling the eraser button at the udev-hid-bpf level will make users that use said button angry and, dear $deity, we can't have angry users, can we? So many angry people out there anyway, let's not add to that.

To get there, libinput's guts had to be changed. Previously libinput would read the kernel events, update the tablet state struct and then generate events based on various state changes. This of course works great when you e.g. get a button toggle, it doesn't work quite as great when your state change was one or two event frames ago (because prox-out of one tool, prox-in of another tool are at least 2 events). Extracting that older state change was like swapping the type of meatballs from an IKEA meal after it's been served - doable in theory, but very messy.

Long story short, libinput now has an internal plugin system that can modify the evdev event stream as it comes in. It works like a pipeline, the events are passed from the kernel to the first plugin, modified, passed to the next plugin, etc. Eventually the last plugin is our actual tablet backend which will update tablet state, generate libinput events, and generally be grateful about having fewer quirks to worry about. With this architecture we can hold back the proximity events and filter them (if the eraser comes into proximity) or replay them (if the eraser does not come into proximity). The tablet backend is none the wiser, it either sees proximity events when those are valid or it sees a button event (depending on configuration).
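For the curious, the hold back, filter or replay idea looks roughly like this as a Python sketch. This is emphatically not libinput's code or its plugin API: the event names are simplified strings instead of evdev frames, `eraser_button_plugin` and `run_pipeline` are invented for illustration, and things like timers and configuration are left out entirely.

```python
PEN_IN, PEN_OUT = "pen-prox-in", "pen-prox-out"
ERASER_IN, ERASER_OUT = "eraser-prox-in", "eraser-prox-out"
PRESS, RELEASE = "button-press", "button-release"


def eraser_button_plugin(events):
    """Turn the firmware's fake pen/eraser tool swap into button events."""
    held = None          # proximity event held back until we see what follows
    button_down = False  # True while the swap is being reported as a button
    for event in events:
        if held is not None:
            pending, held = held, None
            if pending == PEN_OUT and event == ERASER_IN:
                button_down = True
                yield PRESS          # filtered: the swap becomes a press
                continue
            if pending == ERASER_OUT:
                button_down = False
                yield RELEASE
                if event == PEN_IN:
                    continue         # filtered: the swap back is a release
                yield PEN_OUT        # the pen really left proximity
            else:
                yield pending        # replayed: it was a real prox-out
        if event == PEN_OUT or (button_down and event == ERASER_OUT):
            held = event
        else:
            yield event
    # End of stream: flush whatever is still held back.
    if held == ERASER_OUT:
        yield RELEASE
        yield PEN_OUT
    elif held is not None:
        yield held


def run_pipeline(events, plugins):
    """Chain plugins so each one sees the previous one's output."""
    for plugin in plugins:
        events = plugin(events)
    return list(events)


raw = [PEN_IN, "motion", PEN_OUT, ERASER_IN, "motion",
       ERASER_OUT, PEN_IN, PEN_OUT]
out = run_pipeline(raw, [eraser_button_plugin])
print(out)
```

Whatever sits at the end of the pipeline (the tablet backend in the real thing) only ever sees the pen in proximity plus a button press and release, and a genuine prox-out still arrives, just one event later than it was read.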

This architecture approach is so successful that I have now switched a bunch of other internal features over to use that internal infrastructure (proximity timers, button debouncing, etc.). And of course it laid the groundwork for the (presumably highly) anticipated Lua plugin support. Either way, happy times. For a bit. Because for those not needing the eraser feature, we've just increased your available tool button count by 100%[2] - now there's a headline for tech journalists that just blindly copy claims from blog posts.

[1] Since this is a bit wordy, the libinput API call is just libinput_tablet_tool_config_eraser_button_set_button()

[2] A very small number of styli have two buttons and an eraser button so those only get what, 50% increase? Anyway, that would make for a less clickbaity headline so let's handwave those away.

What do concern trolls and privileged people without visible or invisible disabilities who share or make content about accessibility on Linux being trash without contributing anything to projects have in common? They don’t actually really care about the group they’re defending; they just exploit these victims’ unfortunate situation to fuel hate against groups and projects actually trying to make the world a better place.

I never thought I’d be this upset to a point I’d be writing an article about something this sensitive with a clickbait-y title. It’s simultaneously demotivating, unproductive, and infuriating. I’m here writing this post fully knowing that I could have been working on accessibility in GNOME, but really, I’m so tired of having my mood ruined because of privileged people spending at most 5 minutes to write erroneous posts and then pretending to be oblivious when confronted while it takes us 5 months of unpaid work to get a quarter of recognition, let alone acknowledgment, without accounting for the time “wasted” addressing these accusations. This is far from the first time, and it will certainly not be the last.

I’m not mad. I’m absolutely furious and disappointed in the Linux Desktop community for being quiet in regards to any kind of celebration to advancing accessibility, while proceeding to share content and cheer for random privileged people from big-name websites or social media who have literally put a negative amount of effort into advancing accessibility on Linux. I’m explicitly stating a negative amount because they actually make it significantly more stressful for us.

None of this is fair. If you’re the kind of person who stays quiet when we celebrate huge accessibility milestones, yet shares (or even makes) content that trash talks the people directly or indirectly contributing to the fucking software you use for free, you are the reason why accessibility on Linux is shit.

No one in their right mind wants to volunteer in a toxic environment where their efforts are hardly recognized by the public and they are blamed for “not doing enough”, especially when they are expected to take in all kinds of harassment, nonconstructive criticism, and slander for a salary of 0$.

There’s only one thing I am shamefully confident about: I am not okay in the head. I shouldn’t be working on accessibility anymore. The recognition-to-smearing ratio is unbearably low and arguably unhealthy, but leaving people in unfortunate situations behind is also not in accordance with my values.

I’ve been putting so much effort, quite literally hundreds of hours, into:

Number 5 is especially important to me. I personally go as far as to refuse to contribute to projects under a permissive license, and/or that utilize a contributor license agreement, and/or that utilize anything riskily similar to these two, because I am of the opinion that no amount of code for accessibility should either be put under a paywall or be obscured and proprietary.

Permissive licenses make it painlessly easy for abusers to fork, build an ecosystem on top of it which may include accessibility-related improvements, slap a price tag alongside it, all without publishing any of these additions/changes. Corporations have been doing that for decades, and they’ll keep doing it until there’s heavy push back. The only time I would contribute to a project under a permissive license is when the tool is the accessibility infrastructure itself. Contributor license agreements are significantly worse in that regard, so I prefer to avoid them completely.

KDE hired a legally blind contractor to work on accessibility throughout the KDE ecosystem, including complying with the EU Directive to allow selling hardware with Plasma.

GNOME’s new executive director, Steven Deobald, is partially blind.

The GNOME Foundation has been investing a lot of money to improve accessibility on Linux, for example funding Newton, a Wayland accessibility project and AccessKit integration into GNOME technologies. Around 250,000€ (1/4) of the STF budget was spent solely on accessibility. And get this: literally everybody managing these contracts and communication with funders are volunteers; they’re ensuring people with disabilities earn a living, but aren’t receiving anything in return. These are the real heroes who deserve endless praise.

Do you want to know who we should be blaming? Profiteers who are profiting from the community’s effort while investing very little to nothing into accessibility.

This includes a significant portion of the companies sponsoring GNOME and even companies that employ developers to work on GNOME. These companies are the ones making hundreds of millions, if not billions, in net profit indirectly from GNOME (and other free and open-source projects), and investing little to nothing into them. However, the worst offenders are the companies actively using GNOME without ever donating anything to fund the projects.

Some companies actually do put in an effort, like Red Hat and Igalia. Red Hat employs people with disabilities to work on accessibility in GNOME, one of whom I actually rely on when making accessibility-related contributions in GNOME. Igalia funds Orca, the screen reader that is part of GNOME, which is something the Linux community should be thankful for. However, companies have historically invested what’s necessary to comply with governments’ accessibility requirements, and then never invested in it again.

The privileged people who keep sharing and making content around accessibility on Linux being bad without contributing anything to it are, in my opinion, significantly worse than the companies profiting off of GNOME. Companies are and stay quiet, but these privileged people add an additional burden to contributors by either trash talking or sharing trash talkers. Once again, no volunteer deserves to be in the position of being shamed and ridiculed for “not doing enough”, since no one is entitled to their free time, but themselves.

Earlier in this article, I mentioned, and I quote: “I’ve been putting so much effort, quite literally hundreds of hours […]”. Let’s put an emphasis on “hundreds”. Here’s a list of most accessibility-related merge requests that have been incorporated into GNOME:

GNOME Calendar’s !559 addresses an issue where event widgets could not be focused or activated with the keyboard. That issue had been present since the very beginning of GNOME Calendar’s existence, which is to say for more than a decade. This alone was a two-week effort. Despite it being less than 100 lines of code, nobody truly knew what to do to have them working properly before. This was followed up by !576, which made the event buttons usable in the month view with a keyboard, and then !587, which properly conveys the states of the widgets. Both combined are another two-week effort.

Then, at the time of writing this article, !564 adds 640 lines of code, which is something I’ve been volunteering on for more than a month, excluding the time before I opened the merge request.

Let’s do a little bit of math together with ‘only’ !559, !576, and !587. Just as a reminder: these three merge requests are a four-week effort in total, which I volunteered full-time—8 hours a day, or 160 hours a month. I compiled a small table that illustrates its worth:

| Country | Average Wage for Professionals Working on Digital Accessibility (WebAIM) | Total in Local Currency (160 hours) | Exchange Rate | Total (CAD) |
|---|---|---|---|---|
| Canada | 58.71$ CAD/hour | 9,393.60$ CAD | N/A | 9,393.60$ |
| United Kingdom | 48.20£ GBP/hour | 7,712£ GBP | 1.8502 | 14,268.74$ |
| United States of America | 73.08$ USD/hour | 11,692.80$ USD | 1.3603 | 15,905.72$ |

To summarize the table: those three merge requests that I worked on for free were worth 9,393.60$ CAD (6,921.36$ USD) in total at a minimum.
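The totals in the table can be reproduced with a few lines of arithmetic. This is only a sketch: the hourly wages and exchange rates are copied from the table, while the `RATES` layout and the `total_cad` helper are names made up for illustration.

```python
from decimal import Decimal, ROUND_HALF_UP

HOURS = 160  # four weeks of full-time work at 8 hours a day

# (hourly wage in local currency, exchange rate to CAD), copied from the table.
RATES = {
    "Canada": (Decimal("58.71"), Decimal("1")),
    "United Kingdom": (Decimal("48.20"), Decimal("1.8502")),
    "United States of America": (Decimal("73.08"), Decimal("1.3603")),
}


def total_cad(wage, rate, hours=HOURS):
    """Value of `hours` of work, converted to CAD and rounded to cents."""
    amount = wage * hours * rate
    return amount.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


totals = {country: total_cad(wage, rate) for country, (wage, rate) in RATES.items()}

for country, amount in totals.items():
    print(f"{country}: {amount}$ CAD")

# The lowest of the three figures is the "at a minimum" value.
print("Minimum:", min(totals.values()), "$ CAD")
```

Using `Decimal` rather than floats keeps the cents exact, which matters when the point is to compare the results against the figures in the table.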

Just a reminder:

Now just imagine how I feel when I’m told I’m “not doing enough”, either directly or indirectly, by privileged people who don’t rely on any of these accessibility features. Whenever anybody says we’re “not doing enough”, I feel very much included, and I will absolutely take it personally.

I fully expect everything I say in this article to be dismissed or be taken out of context on the basis of ad hominem, simply by the mere fact I’m a GNOME Foundation member / regular GNOME contributor. Either that, or be subject to whataboutism because another GNOME contributor made a comment that had nothing to do with mine but ‘is somewhat related to this topic and therefore should be pointed out just because it was maybe-probably-possibly-perhaps ableist’. I can’t speak for other regular contributors, but I presume that they don’t feel comfortable talking about this because they dared be a GNOME contributor. At least, that’s how I felt for the longest time.

Any content related to accessibility that doesn’t dunk on GNOME doesn’t see as much engagement, activity, and reaction as content that actively attacks GNOME, regardless of whether the criticism is fair. Many of these people don’t even use these accessibility features; they’re just looking for every opportunity to say “GNOME bad” and will 🪄 magically 🪄 start caring about accessibility.

Regular GNOME contributors like myself don’t always feel comfortable defending ourselves because dismissing GNOME developers just for being GNOME developers is apparently a trend…

Dear people with disabilities,

I won’t insist that we’re either your allies or your enemies—I have no right to claim that whatsoever.

I wasn’t looking for recognition. I wasn’t looking for acknowledgment since the very beginning either. I thought I would be perfectly capable of quietly improving accessibility in GNOME, but because of the overall community’s persistence in smearing developers’ efforts without actually tackling the underlying issues within the stack, I think I’m justified in at least demanding acknowledgment from the wider community.

I highly doubt it will happen anyway, because the Linux community feeds off of drama and trash talking instead of being productive, without realizing that it demotivates active contributors while pushing away potential contributors. And worst of all: people with disabilities are the ones affected the most because they are misled into thinking that we don’t care.

It’s so unfair and infuriating that all the work I do and share online gains very little activity compared to random posts and articles from privileged people without disabilities that rant about the Linux desktop’s accessibility being trash. It doesn’t help that I become severely anxious about sharing accessibility-related work, because I want to avoid any sign of virtue signalling. The last thing I want is to (unintentionally) give any sign or impression of pretending to care about accessibility.

I beg you, please keep writing banger posts like fireborn’s I Want to Love Linux. It Doesn’t Love Me Back series and their interluding post. We need more people with disabilities to keep reminding developers that you exist and your conditions and disabilities are a spectrum, and not absolute.

We simultaneously need more interest from people with disabilities to contribute to free and open-source software, and the wider community to be significantly more intolerant of bullies who profit from smearing and demotivating people who are actively trying.

We should take inspiration from “Accessibility on Linux sucks, but GNOME and KDE are making progress” by OSNews. They acknowledge that accessibility on Linux is suboptimal while recognizing the efforts of GNOME and KDE. As a community, we should promote progress more often.

The All Systems Go! 2025 Call for Participation Closes Tomorrow!

The Call for Participation (CFP) for All Systems Go! 2025 will close tomorrow, on 13th of June! We’d like to invite you to submit your proposals for consideration to the CFP submission site quickly!

The Vulkan WG has released VK_KHR_video_decode_vp9. I did initial work on a Mesa extension for this a good while back, and I've updated the radv code with help from AMD and Igalia to the final specification.

There is an open MR[1] for radv to add support for vp9 decoding on navi10+ with the latest firmware images in linux-firmware. It is currently passing all VK-GL-CTS tests for VP9 decode.

Adding this decode extension is a big milestone for me, as I think it means all the reasons I originally got involved in Vulkan Video are now signed off. There is still lots to do and I'll stay involved, but it's been great to see the contributions from others and how there is now a bit of a Vulkan Video community upstream in Mesa.

[1] https://gitlab.freedesktop.org/mesa/mesa/-/merge_requests/35398

As many of you have seen, I’ve been deleting a lot of code lately. There’s a reason for this, aside from it being a really great feeling to just obliterate some entire subsystem, and that reason is time.

There are 24 hours in a day. You sleep for 6. You work for 8. Spend an hour eating, and then you’re down to only 9 hours at the gym minus a few minutes to manage those pesky social and romantic obligations. That doesn’t leave a lot of time for mucking around in random codebases.

For example, suppose I maintain a Gallium driver. This likely means I know my way around that driver, various related infrastructure, the GL state tracker, NIR, maybe enough GLSL to rubber stamp some MRs from @tarceri, and I know which IRC channel to scream in when my MRs get blocked by something that is definitely not me failing to test-compile the patches before merging them. Everything outside of these areas is out of scope for this hypothetical version of me, which means it may as well be a black box.

Now imagine I am all the maintainers of all the Gallium drivers. My collective scope has expanded. I am the master of all things src/gallium/drivers. I wave my hand and src/mesa obeys my whim. CI is always green, except when matters beyond the control of mere mortals conspire against me. I have a blog. News sites cover my MRs as though OpenGL is still relevant.

But there are still black boxes. Vulkan drivers, for example, are a mystery. CI is an artifact from a distant civilization which, though alien, ensures everything functions as I know it does. And then there are the esoteric parts of the tree in src/gallium/frontends. People I’ve never met may file bug reports against my drivers with tags for one of these components. Who is sexypixel420, what is a teflon, and why is that my problem?

A key aspect of any good Open Source project is maintenance. This is, relatively speaking, how well it is expected to function if Joe Randomguy installs and runs it. Maintenance of projects requires people to work on them and fix bugs. These are maintainers. When a project has a maintainer, we say that it is maintained. A project which does not have a maintainer is unmaintained. Simple enough.

Mesa is a project comprised of many subprojects. We call this an ecosystem. An ecosystem functions when all its projects work together in harmony towards a common goal, in this case blasting out those pixels into as many green triangles per second as possible.

What happens when a maintained project has an issue? Well, that’s when the maintainer steps in to fix it (assuming some other random contributor doesn’t, but we’re assuming a very low bus factor here). Tickets are filed, maintainers analyze and fix, and end users are happy because the software they randomly installed happens to work as they expect.

But what happens when a project with no maintainer has an issue? In short, nothing. That issue is filed away into the void, never to be resolved ever in a million years (unless some kind soul happens to pitch an unreviewed #TrustMeBuddy patch into the repo, but this is rare). These issues accumulate, and nobody even notices because nobody is subscribed to that label on the issue tracker. The project is derelict. If the project accumulates enough of these issues, distributions may even stop packaging it; packaging a defective piece of software creates downstream tickets for packagers, and much of the time they are not looking to drag their editor upstream and solve all the problems because they have more than enough problems already with packaging.

Now here’s where things start to get messy: what happens when an unmaintained subproject in an ecosystem has an issue? Some might be tempted to say this is the same as the above scenario, but it’s subtly different because the issue might not be directly user-facing. It might be “what happens in this codebase if I change this thing over here?” And if a codebase is unmaintained, then nobody knows what happens. The code can be read, but without a maintainer who possesses deep knowledge about the intent of the machinery, such shallow readings can only do so much.

Like trees with dead limbs, dead parts of Open Source projects must be periodically pruned to keep the rest of the project healthy. Having all these dead limbs around creates a larger surface area for the ecosystem, which creates the potential for unintended side effects (and bizarro bugs from unknown components) to manifest. It also has a hidden cost, which is burnout. When a maintainer must step outside their area in an attempt to triage something in a codebase that they do not know, instinctual fear and distaste of Other Code kicks in: this code is terrible because I didn’t write it. Also what the fuck is with this formatting? Is that a same-line brace with no space after the closing parens?! That’s it, I’m clocking it for today.

We’ve all been out in the jungle with some code that may as well be written in dirt. It sucks. And any time you’re stuck out in the dirt for more than a couple minutes, you want to be able to call in an expert to bail you out. Those experts are called maintainers. When you enter territory which is unmaintained, you’re effectively stranded unless you can cut your way out. If you can’t cut your way out, you’re stuck, and being stuck is frustrating, and being frustrated makes you not want to work on your thing anymore, which is how you end up losing maintainers. One of the ways, that is, because we’re all just one sarcastic winky-face away from a ranty ragequit mail.

Now is when I reveal that this long-winded, circuitous explanation is not actually about everyone’s favorite D3D9 state tracker (pour one out for a legend) or whatever the hell XA was. I’m talking about last week when I deleted legacy renderpass support from Zink. It’s been a long time coming, and realistically I should have done this sooner.

Like Mesa, Zink is an ecosystem supporting a wide variety of projects, but it’s also a single project with a single maintainer. A bug in RadeonSI code will not affect me, but a bug in Zink code affects me even if it is not code which has been tested or even used in the past 5 years. While it’s likely true that any code in Zink is code that I have written, there’s a big difference between code written in the past year and code written back in like 2020: in the former case I probably know what’s happening and why, and in the latter case it’s more likely that I’m confused how the code still exists.

Vulkan is a moving target. Every month brings changes and improvements, fun new extensions to misuse, and long-lost validation errors to tell us that nobody actually knows how to use SPIR-V. Over time, these new features and changes become more widely adopted, which makes them reliable, but historically Zink has been very lax in requiring “new” features.

There is this idea that Zink should be able to provide high-level OpenGL support to any device which provides any amount of conformant Vulkan support. It’s a neat idea: provide Vulkan 1.0, and you get GL 4.6 + ES 3.2 for free. There are, however, a number of issues with this pie-in-the-sky thinking:

tiler renderpass tracking + GPL + descriptor templates vs desktop rendering + shader objects + descriptor buffer, now tell me which one gets better perf on RADV–it’s the first one because Zink does not ever create linked/optimized shader objects).

I’m not saying all this as a cry for help, though help is always appreciated, encouraged, and welcomed. This is a notice that I’m going to be pruning some old and unused codepaths to keep things manageable. Zink isn’t going to work on Vulkan 1.0; that goal is a nice idea but not achievable, especially when there is fierce competition like ANGLE gunning for every fraction of a perf percent they can get. I don’t foresee requiring any new extensions/features the day they ship, but I also don’t foresee keeping legacy fallbacks for codepaths which should be standard by now.

TL;DR: If you want Zink on old drivers/hardware, try Mesa 25.1. Everyone else, business as usual.

I had intended to be writing this post over a month ago, but [for reasons] I’m here writing it now.

Way back in March of ‘25, I was doing work that I could talk about publicly, and a sizable chunk of that was working to improve Gallium. The stopping point of that work was the colossal !34054, which roughly amounts to “remove a single * from a struct”. The result was rewriting every driver and frontend in the tree to varying extents:

` 260 files changed, 2179 insertions(+), 2331 deletions(-)`

So as I was saying, I expected to merge this right after the 25.1 branchpoint back around mid-April, which would have allowed me to keep my train of thought and momentum. Sadly this did not come to pass, and as a result I’ve forgotten most of the key points of that blog post (and related memes). But I still have this:

As readers of this blog, you’re all very smart. You can smell bullshit a country mile away. That’s why I’m going to treat you like the intelligent rhinoceroses you are and tell you right now that I no longer have any of the performance statistics I’d gathered for this post. We’re all gonna have to go on vibes and #TrustMeBuddy energy. I’ll begin by posing a hypothetical to you.

Suppose you’re running a complex application. Suppose this application has threads which share data. Now suppose you’re running this on an AMD CPU. What is your most immediate, significant performance concern?

If you said atomic operations, you are probably me from way back in February–Take that time machine and get back where you belong. The problems are not fixed.

AMD CPUs are bad with atomic operations. It’s a feature. No, I will not go into more detail; months have passed since I read all those dissertations, and I can’t remember what I ate for breakfast an hour ago. #TrustMeBuddy.

I know what you’re thinking. Mike, why aren’t you just pinning your threads?

Well, you incredibly handsome reader, the thing is thread pinning is a lie. You can pin threads by setting their affinity to keep them on the same CCX, and L3 cache, and blah blah blah, and even when you do that sometimes it has absolutely zero fucking effect and your fps is still 6. There is no explanation. PhDs who work on atomic operations in compilers cannot explain this. The dark lord Yog-Sothoth cowers in fear when pressed for details. Even tariffs on performance penalties cannot mitigate this issue.

In that sense, when you have your complex threadful application which uses atomic operations on an AMD CPU, and when you want to achieve the same performance it can have for free on a different type of CPU, you have four options:

Obviously none of these options are very appealing. If you have a complex application, you need threads, you need your AMD CPU with its bazillion cores, you need atomic operations, and, being realistic, the situation here with hardware/kernel/compiler is not going to improve before AI takes over my job and I quit to become a full-time novel writer in the budding rom-pixel-com genre.

While eliminating all atomic operations isn’t viable, eliminating a certain class of them is theoretically possible. I’m talking, of course, about reference counting, the means by which C developers LARP as Java developers.

In Mesa, nearly every object is reference counted, especially the ones which have no need for garbage collection. Haters will scream REWRITE IT IN RUST, but I’m not going to do that until someone finally rewrites the GLSL compiler in Rust to kick off the project. That’s right, I’m talking to all you rustaceans out there: do something useful for once instead of rewriting things that aren’t the best graphics stack on the planet.

A great example of this reference counting overreliance was sampler views, which I took a hatchet to some months ago. This is a context-specific object which has a clear ownership pattern. Why was it reference counted? Science cannot explain this, but psychologists will tell you that engineers will always follow existing development patterns without question regardless of how non-performant they may be. Don’t read any zink code to find examples. #TrustMeBuddy.

Sampler views were a relatively easy pickup, more like a proof of concept to see if the path was viable. Upon succeeding, I immediately rushed to the hardest possible task: the framebuffer. Framebuffer surfaces can be shared between contexts, which makes them extra annoying to solve in this case. For that reason, the solution was not to try a similar approach, it was to step back and analyze the usage and ownership pattern.

Originally the pipe_surface object was used solely for framebuffers, but this concept has since metastasized to clear operations and even video. It’s a useful object at a technical level: it provides a resource, format, miplevel, and layers. But does it really need to be an object?

Deeper analysis said no: the vast majority of drivers didn’t use this for anything special, and few drivers invested into architecture based on this being an actual object vs just having the state available. The majority of usage was pointlessly passing the object around because the caller handed it off to another function.

Of course, in the process of this analysis, I noted that zink was one of the heaviest investors into pipe_surface*. Pour one out for my past decision-making process. But I pulled myself up by my bootstraps, and I rewrote every driver and every frontend, and now whenever the framebuffer changes there are at least num_attachments * (frontend_thread + tc_thread + driver_thread) fewer atomic operations.
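To make that count concrete, here is a toy model of the two ownership patterns. It is a sketch and not Mesa code: `RefCountedSurface`, `SurfaceState`, and the stage names are invented for illustration, and a plain Python counter stands in for the atomic increment a C driver would perform on shared memory.

```python
from dataclasses import dataclass

# Hypothetical names for the three places a framebuffer change passes through.
STAGES = ("frontend_thread", "tc_thread", "driver_thread")


class RefCountedSurface:
    """Stand-in for a reference-counted surface object."""

    atomic_ops = 0  # tally of simulated atomic increments, across all surfaces

    def __init__(self, resource, fmt, level, layers):
        self.resource, self.fmt, self.level, self.layers = resource, fmt, level, layers
        self.refcount = 1

    def reference(self):
        # In C this would be an atomic increment, contended between threads.
        self.refcount += 1
        RefCountedSurface.atomic_ops += 1
        return self


@dataclass(frozen=True)
class SurfaceState:
    """The same information as plain state: copied around, never shared."""

    resource: str
    fmt: str
    level: int
    layers: tuple


def set_framebuffer_refcounted(surfaces):
    # Every stage that handles the framebuffer takes its own reference.
    for _stage in STAGES:
        for surface in surfaces:
            surface.reference()


def set_framebuffer_by_value(states):
    # Every stage keeps its own copy; no shared counter is touched.
    return {stage: tuple(states) for stage in STAGES}


num_attachments = 4
surfaces = [RefCountedSurface(f"tex{i}", "RGBA8", 0, (0, 0)) for i in range(num_attachments)]
set_framebuffer_refcounted(surfaces)
print("simulated atomic ops:", RefCountedSurface.atomic_ops)

states = [SurfaceState(f"tex{i}", "RGBA8", 0, (0, 0)) for i in range(num_attachments)]
copies = set_framebuffer_by_value(states)
```

The model only counts taking references. Dropping them again would add more operations on top, which is why the figure above is a lower bound.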

This saga is not over. There’s still base buffers and images to go, which is where a huge amount of performance is lost if you are hitting an affected codepath. Ideally those changes will be smaller and more concentrated than the framebuffer refactor.

Ideally I will find time for it.

#TrustMeBuddy.

Linux 6.15 has just been released, bringing a lot of new features:

As always, I suggest having a look at the Kernel Newbies summary. Now, let’s have a look at Igalia’s contributions.

In 3D graphics APIs such as Vulkan and OpenGL, there are mechanisms that applications can rely on to check whether the GPU has reset (you can read more about this in the kernel documentation). However, there was no generic mechanism to inform userspace that a GPU reset has happened. Such a mechanism is useful because in some cases the reset affects not only the app involved in the reset, but the whole graphics stack, and some action is then needed to recover, like doing a module rebind or even a bus reset to recover the hardware. For this release, we helped to add a userspace event for this, so a daemon or the compositor can listen to it and trigger some recovery measure after the GPU has reset. Read more in the kernel docs.
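As a rough idea of what such a listener has to do, the sketch below parses a uevent-style payload and picks a recovery action. It assumes the event carries a `WEDGED=` key holding a comma-separated list of recovery methods such as `rebind` and `bus-reset`, which is how the kernel's device-wedging documentation appears to describe it; please check the kernel docs for the exact format. The `parse_uevent` and `choose_recovery` helpers, the preference order, and the sample payload are all hypothetical.

```python
def parse_uevent(payload):
    """Split a KEY=VALUE-per-line uevent payload into a dictionary."""
    fields = {}
    for line in payload.splitlines():
        key, sep, value = line.partition("=")
        if sep:  # ignore lines that are not KEY=VALUE
            fields[key] = value
    return fields


# Assumed method names, ordered from least to most disruptive.
PREFERRED_ORDER = ("rebind", "bus-reset")


def choose_recovery(fields):
    """Return the least disruptive advertised method, or None if the
    event is not a wedged notification at all."""
    if "WEDGED" not in fields:
        return None
    advertised = fields["WEDGED"].split(",")
    for method in PREFERRED_ORDER:
        if method in advertised:
            return method
    return "unknown"  # a wedged event with no method this sketch knows about


# Hypothetical payload, shaped like what a udev monitor would print.
sample = "\n".join(
    [
        "ACTION=change",
        "DEVPATH=/devices/example/drm/card0",
        "SUBSYSTEM=drm",
        "WEDGED=rebind,bus-reset",
    ]
)

print(choose_recovery(parse_uevent(sample)))
```

A real daemon would receive these events from udev rather than from a string, and would then run whatever rebind or reset procedure fits the system.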

In the DRM scheduler area, in preparation for future scheduling improvements, we worked on cleaning up the code base, better separating the internal and external interfaces, and adding formal interfaces in places where individual drivers had too much knowledge of the scheduler internals.

In the wider GPU stack area we optimised the most frequent dma-fence single fence merge operation to avoid memory allocations and array sorting. This should slightly reduce the CPU utilisation with workloads which use the DRM sync objects heavily, such as the modern composited desktops using Vulkan explicit sync.

Some releases ago, we helped to enable async page flips in the atomic DRM uAPI. So far, this feature was only enabled for the primary plane. In this release, we added a mechanism for the driver to decide which plane can perform async flips. We used this to enable overlay planes to do async flips in the AMDGPU driver.

We also fixed a bug in the DRM fdinfo common layer which could cause a use-after-free after driver unbind.

On the Intel GPU specific front we worked on adding better Alderlake-P support to the new Intel Xe driver by identifying and adding missing hardware workarounds, fixing the workaround application in general, and making some other smaller improvements.

When developing and optimizing a sched_ext-based scheduler, it is important to understand the interactions between the BPF scheduler and the in-kernel sched_ext core. If there is a mismatch between what the BPF scheduler developer expects and how the sched_ext core actually works, such a mismatch could often be the source of bugs or performance issues.

To address such a problem, we added a mechanism to count and report the internal events of the sched_ext core. This significantly improves the visibility of subtle edge cases, which might otherwise easily slip by. So far, eight events have been added, and the events can be monitored through a BPF program, sysfs, and a tracepoint.

As usual, as part of our work on diverse projects, we keep an eye on automated test results to look for potential security and stability issues in different kernel areas. We’re happy to have contributed to making this release a bit more robust by fixing bugs in memory management, network (SCTP), ext4, suspend/resume and other subsystems.

This is the complete list of Igalia’s contributions for this release:

v3d->cpu_job

First of all, what's outlined here should be available in libinput 1.29 but I'm not 100% certain on all the details yet so any feedback (in the libinput issue tracker) would be appreciated. Right now this is all still sitting in the libinput!1192 merge request. I'd specifically like to see some feedback from people familiar with Lua APIs. With this out of the way:

Come libinput 1.29, libinput will support plugins written in Lua. These plugins sit logically between the kernel and libinput and allow modifying the evdev device and its events before libinput gets to see them.

The motivation for this is a few unfixable issues - issues we know how to fix but cannot actually implement and/or ship the fixes for without breaking other devices. One example for this is the inverted Logitech MX Master 3S horizontal wheel. libinput ships quirks for the USB/Bluetooth connection but not for the Bolt receiver. Unlike the Unifying Receiver the Bolt receiver doesn't give the kernel sufficient information to know which device is currently connected. Which means our quirks could only apply to the Bolt receiver (and thus any mouse connected to it) - that's a rather bad idea though, we'd break every other mouse using the same receiver. Another example is an issue with worn out mouse buttons - on that device the behavior was predictable enough but any heuristics would catch a lot of legitimate buttons. That's fine when you know your mouse is slightly broken and at least it works again. But it's not something we can ship as a general solution. There are plenty more examples like that - custom pointer deceleration, different disable-while-typing, etc.

libinput has quirks but they are internal API and subject to change without notice at any time. They're very definitely not for configuring a device and the local quirk file libinput parses is merely to bridge over the time until libinput ships the (hopefully upstreamed) quirk.

So the obvious solution is: let the users fix it themselves. And this is where the plugins come in. They are not full access into libinput, they are closer to a udev-hid-bpf in userspace. Logically they sit between the kernel event devices and libinput: input events are read from the kernel device, passed to the plugins, then passed to libinput. A plugin can look at and modify devices (add/remove buttons for example) and look at and modify the event stream as it comes from the kernel device. For this libinput changed internally to now process something called an "evdev frame" which is a struct that contains all struct input_events up to the terminating SYN_REPORT. This is the logical grouping of events anyway but so far we didn't explicitly carry those around as such. Now we do and we can pass them through to the plugin(s) to be modified.
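To illustrate the "evdev frame" grouping, here is a small model in Python (not libinput's C implementation, and not the plugin API). The numeric constants are the ones from the kernel's input event codes; the `group_into_frames` helper and the tuple representation of an event are made up for this sketch.

```python
# Values from linux/input-event-codes.h
EV_SYN, EV_REL = 0x00, 0x02
SYN_REPORT = 0
REL_X, REL_HWHEEL = 0x00, 0x06


def group_into_frames(events):
    """Yield lists of (type, code, value) tuples, each list ending with
    the SYN_REPORT event that terminates the frame."""
    frame = []
    for event in events:
        frame.append(event)
        ev_type, code, _value = event
        if ev_type == EV_SYN and code == SYN_REPORT:
            yield frame
            frame = []
    # Trailing events without a SYN_REPORT form an incomplete frame
    # and are deliberately not yielded.


stream = [
    (EV_REL, REL_X, 3),
    (EV_REL, REL_HWHEEL, 1),
    (EV_SYN, SYN_REPORT, 0),
    (EV_REL, REL_X, -2),
    (EV_SYN, SYN_REPORT, 0),
    (EV_REL, REL_X, 5),  # no SYN_REPORT yet: still pending
]

frames = list(group_into_frames(stream))
for number, frame in enumerate(frames):
    print(number, frame)
```

Handing a plugin whole frames rather than single events means it always sees a consistent snapshot of the device state, e.g. both axes of one motion together.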

The aforementioned Logitech MX master plugin would look like this: it registers itself with a version number, then sets a callback for the "new-evdev-device" notification and (where the device matches) we connect that device's "evdev-frame" notification to our actual code:

    libinput:register(1) -- register plugin version 1
    libinput:connect("new-evdev-device", function (_, device)
        if device:vid() == 0x046D and device:pid() == 0xC548 then
            device:connect("evdev-frame", function (_, frame)
                for _, event in ipairs(frame.events) do
                    if event.type == evdev.EV_REL and
                       (event.code == evdev.REL_HWHEEL or
                        event.code == evdev.REL_HWHEEL_HI_RES) then
                        event.value = -event.value
                    end
                end
                return frame
            end)
        end
    end)

This file can be dropped into /etc/libinput/plugins/10-mx-master.lua and will be loaded on context creation.

I'm hoping the approach using named signals (similar to e.g. GObject) makes it easy to add different calls in future versions. Plugins also have access to a timer so you can filter events and re-send them at a later point in time. This is useful for implementing something like disable-while-typing based on certain conditions.

So why Lua? Because it's very easy to sandbox. I very explicitly did not want the plugins to be a side-channel to get into the internals of libinput - specifically no IO access to anything. This ruled out using C (or anything that's a .so file, really) because those would run a) in the address space of the compositor and b) be unrestricted in what they can do. Lua solves this easily. And, as a nice side-effect, it's also very easy to write plugins in.[1]

Whether plugins are loaded or not will depend on the compositor: an explicit call to set up the paths to load from and to actually load the plugins is required. No run-time plugin changes at this point either, they're loaded on libinput context creation and that's it. Otherwise, all the usual implementation details apply: files are sorted and if there are files with identical names the one from the highest-precedence directory will be used. Plugins that are buggy will be unloaded immediately.
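The lookup rule can be modelled in a few lines of Python. This is a sketch of the rule as described above, not libinput's implementation: the `resolve_plugins` helper, the second directory, and the idea that the caller passes directories from highest to lowest precedence are assumptions made for the example.

```python
def resolve_plugins(directories):
    """Pick the plugin files to load.

    `directories` is a list of (path, filenames) pairs ordered from highest
    to lowest precedence. For identical file names the first (highest
    precedence) directory wins; the result is sorted by file name.
    """
    chosen = {}
    for path, filenames in directories:
        for name in filenames:
            # setdefault keeps the entry from the earlier, higher-precedence directory
            chosen.setdefault(name, f"{path}/{name}")
    return [chosen[name] for name in sorted(chosen)]


# Hypothetical directory contents; only /etc/libinput/plugins is from the text.
search_path = [
    ("/etc/libinput/plugins", ["10-mx-master.lua"]),
    ("/usr/lib/libinput/plugins", ["20-example.lua", "10-mx-master.lua"]),
]

load_order = resolve_plugins(search_path)
for plugin in load_order:
    print(plugin)
```

With this rule, dropping a file with the same name into the higher-precedence directory is enough to override a plugin shipped elsewhere.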

If all this sounds interesting, please give it a try and report back any APIs that are broken, or missing, or generally ideas of the good or bad persuasion. Ideally before we ship it and the API is stable forever :)

[1] Benjamin Tissoires actually had a go at WASM plugins (via rust). But ... a lot of effort for rather small gains over Lua

This week, I reviewed the latest available version of the Linux KMS Color API. Specifically, I explored the API proposed by Harry Wentland and Alex Hung (AMD) and their implementation for the AMD display driver, and tracked the parallel efforts of Uma Shankar and Chaitanya Kumar Borah (Intel) in bringing this plane color management to life. With this API in place, compositors will be able to provide better HDR support and advanced color management for Linux users.

To get a hands-on feel for the API’s potential, I developed a fork of drm_info compatible with the new color properties. This allowed me to visualize the display hardware color management capabilities being exposed. If you’re curious and want to peek behind the curtain, you can find my exploratory work on the drm_info/kms_color branch. The README there will guide you through the simple compilation and installation process.

Note: You will need to update libdrm to match the proposed API. You can find an updated version in my personal repository here. To avoid potential conflicts with your official libdrm installation, you can compile and install it in a local directory. Then, use the following command: `export LD_LIBRARY_PATH="/usr/local/lib/"`

In this post, I invite you to familiarize yourself with the new API that is about to be released. You can start by doing as I did below: just deploy a custom kernel with the necessary patches and visualize the interface with the help of drm_info. Or, better yet, if you are a userspace developer, you can start developing use cases by experimenting with it. The more eyes the better.

The great news is that AMD’s driver implementation for plane color operations is being developed right alongside their Linux KMS Color API proposal, so it’s easy to apply to your kernel branch and check it out. You can find details of their progress in AMD’s series.

I just needed to compile a custom kernel with this series applied, intentionally leaving out the AMD_PRIVATE_COLOR flag. The AMD_PRIVATE_COLOR flag guards driver-specific color plane properties, which experimentally expose hardware capabilities while we don’t have the generic KMS plane color management interface available.

If you don’t know or don’t remember the details of AMD driver specific color properties, you can learn more about this work in my blog posts [1] [2] [3]. As driver-specific color properties and KMS colorops are redundant, the driver only advertises one of them, as you can see in AMD workaround patch 24.

So, with the custom kernel image ready, I installed it on a system powered by AMD DCN3 hardware (i.e. my Steam Deck). Using my custom drm_info, I could clearly see the Plane Color Pipeline with eight color operations as below:

    └───"COLOR_PIPELINE" (atomic): enum {Bypass, Color Pipeline 258} = Bypass
        ├───Bypass
        └───Color Pipeline 258
            ├───Color Operation 258
            │   ├───"TYPE" (immutable): enum {1D Curve, 1D LUT, 3x4 Matrix, Multiplier, 3D LUT} = 1D Curve
            │   ├───"BYPASS" (atomic): range [0, 1] = 1
            │   └───"CURVE_1D_TYPE" (atomic): enum {sRGB EOTF, PQ 125 EOTF, BT.2020 Inverse OETF} = sRGB EOTF
            ├───Color Operation 263
            │   ├───"TYPE" (immutable): enum {1D Curve, 1D LUT, 3x4 Matrix, Multiplier, 3D LUT} = Multiplier
            │   ├───"BYPASS" (atomic): range [0, 1] = 1
            │   └───"MULTIPLIER" (atomic): range [0, UINT64_MAX] = 0
            ├───Color Operation 268
            │   ├───"TYPE" (immutable): enum {1D Curve, 1D LUT, 3x4 Matrix, Multiplier, 3D LUT} = 3x4 Matrix
            │   ├───"BYPASS" (atomic): range [0, 1] = 1
            │   └───"DATA" (atomic): blob = 0
            ├───Color Operation 273
            │   ├───"TYPE" (immutable): enum {1D Curve, 1D LUT, 3x4 Matrix, Multiplier, 3D LUT} = 1D Curve
            │   ├───"BYPASS" (atomic): range [0, 1] = 1
            │   └───"CURVE_1D_TYPE" (atomic): enum {sRGB Inverse EOTF, PQ 125 Inverse EOTF, BT.2020 OETF} = sRGB Inverse EOTF
            ├───Color Operation 278
            │   ├───"TYPE" (immutable): enum {1D Curve, 1D LUT, 3x4 Matrix, Multiplier, 3D LUT} = 1D LUT
            │   ├───"BYPASS" (atomic): range [0, 1] = 1
            │   ├───"SIZE" (atomic, immutable): range [0, UINT32_MAX] = 4096
            │   ├───"LUT1D_INTERPOLATION" (immutable): enum {Linear} = Linear
            │   └───"DATA" (atomic): blob = 0
            ├───Color Operation 285
            │   ├───"TYPE" (immutable): enum {1D Curve, 1D LUT, 3x4 Matrix, Multiplier, 3D LUT} = 3D LUT
            │   ├───"BYPASS" (atomic): range [0, 1] = 1
            │   ├───"SIZE" (atomic, immutable): range [0, UINT32_MAX] = 17
            │   ├───"LUT3D_INTERPOLATION" (immutable): enum {Tetrahedral} = Tetrahedral
            │   └───"DATA" (atomic): blob = 0
            ├───Color Operation 292
            │   ├───"TYPE" (immutable): enum {1D Curve, 1D LUT, 3x4 Matrix, Multiplier, 3D LUT} = 1D Curve
            │   ├───"BYPASS" (atomic): range [0, 1] = 1
            │   └───"CURVE_1D_TYPE" (atomic): enum {sRGB EOTF, PQ 125 EOTF, BT.2020 Inverse OETF} = sRGB EOTF
            └───Color Operation 297
                ├───"TYPE" (immutable): enum {1D Curve, 1D LUT, 3x4 Matrix, Multiplier, 3D LUT} = 1D LUT
                ├───"BYPASS" (atomic): range [0, 1] = 1
                ├───"SIZE" (atomic, immutable): range [0, UINT32_MAX] = 4096
                ├───"LUT1D_INTERPOLATION" (immutable): enum {Linear} = Linear
                └───"DATA" (atomic): blob = 0

Note that Gamescope is currently using AMD driver-specific color properties implemented by me, Autumn Ashton and Harry Wentland. It doesn’t use this KMS Color API, and therefore COLOR_PIPELINE is set to Bypass. Once the API is accepted upstream, all users of the driver-specific API (including Gamescope) should switch to the KMS generic API, as this will be the official plane color management interface of the Linux kernel.
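To make the structure of the drm_info listing easier to reason about, here is a minimal Python model of it. It is only a mental model of what drm_info prints for the AMD pipeline above, not the kernel uAPI or libdrm: the class and function names are invented for this sketch, and the object IDs and operation types are copied from the listing.

```python
from dataclasses import dataclass, field


@dataclass
class ColorOp:
    object_id: int
    op_type: str
    bypass: bool = True  # the listing shows BYPASS = 1 on every operation


@dataclass
class ColorPipeline:
    object_id: int
    ops: list = field(default_factory=list)

    def active_ops(self):
        """Operations that would actually transform pixels (BYPASS = 0)."""
        return [op for op in self.ops if not op.bypass]


# The eight operations of "Color Pipeline 258", in pipeline order.
amd_pipeline = ColorPipeline(258, [
    ColorOp(258, "1D Curve"),
    ColorOp(263, "Multiplier"),
    ColorOp(268, "3x4 Matrix"),
    ColorOp(273, "1D Curve"),
    ColorOp(278, "1D LUT"),
    ColorOp(285, "3D LUT"),
    ColorOp(292, "1D Curve"),
    ColorOp(297, "1D LUT"),
])


def describe(selected):
    """selected is None when COLOR_PIPELINE is set to Bypass."""
    if selected is None:
        return "Bypass"
    names = [op.op_type for op in selected.active_ops()]
    return " -> ".join(names) if names else "all operations bypassed"


print(describe(None))          # the state Gamescope leaves the plane in today
print(describe(amd_pipeline))  # pipeline selected, but every op still bypassed
```

In this model, a compositor adopting the generic API would select the pipeline and clear the bypass flag only on the operations it needs.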

On the Intel side, the driver implementation available upstream was built upon an earlier iteration of the API. This meant I had to apply a few tweaks to bring it in line with the latest specifications. You can explore their latest work here. For a more simplified handling, combining the V9 of the Linux Color API, Intel’s contributions, and my necessary adjustments, check out my dedicated branch.

I then compiled a kernel from this integrated branch and deployed it on a system featuring Intel TigerLake GT2 graphics. Running my custom drm_info revealed a Plane Color Pipeline with three color operations as follows:

    ├───"COLOR_PIPELINE" (atomic): enum {Bypass, Color Pipeline 480} = Bypass
    │   ├───Bypass
    │   └───Color Pipeline 480
    │       ├───Color Operation 480
    │       │   ├───"TYPE" (immutable): enum {1D Curve, 1D LUT, 3x4 Matrix, 1D LUT Mult Seg, 3x3 Matrix, Multiplier, 3D LUT} = 1D LUT Mult Seg
    │       │   ├───"BYPASS" (atomic): range [0, 1] = 1
    │       │   ├───"HW_CAPS" (atomic, immutable): blob = 484
    │       │   └───"DATA" (atomic): blob = 0
    │       ├───Color Operation 487
    │       │   ├───"TYPE" (immutable): enum {1D Curve, 1D LUT, 3x4 Matrix, 1D LUT Mult Seg, 3x3 Matrix, Multiplier, 3D LUT} = 3x3 Matrix
    │       │   ├───"BYPASS" (atomic): range [0, 1] = 1
    │       │   └───"DATA" (atomic): blob = 0
    │       └───Color Operation 492
    │           ├───"TYPE" (immutable): enum {1D Curve, 1D LUT, 3x4 Matrix, 1D LUT Mult Seg, 3x3 Matrix, Multiplier, 3D LUT} = 1D LUT Mult Seg
    │           ├───"BYPASS" (atomic): range [0, 1] = 1
    │           ├───"HW_CAPS" (atomic, immutable): blob = 496
    │           └───"DATA" (atomic): blob = 0

Observe that Intel’s approach introduces additional properties like “HW_CAPS” at the color operation level, along with two new color operation types: 1D LUT with Multiple Segments and 3x3 Matrix. It’s important to remember that this implementation is based on an earlier stage of the KMS Color API and is awaiting review.

I’m impressed by the solid implementation and clear direction of the V9 of the KMS Color API. It aligns with the many insightful discussions we’ve had over the past years. A huge thank you to Harry Wentland and Alex Hung for their dedication in bringing this to fruition!

Beyond their efforts, I deeply appreciate Uma and Chaitanya’s commitment to updating Intel’s driver implementation to align with the freshest version of the KMS Color API. The collaborative spirit of the AMD and Intel developers in sharing their color pipeline work upstream is invaluable. We’re now gaining a much clearer picture of the color capabilities embedded in modern display hardware, all thanks to their hard work, comprehensive documentation, and engaging discussions.

Finally, thanks to all the userspace developers, color science experts, and kernel developers from various vendors who actively participate in the upstream discussions, meetings, workshops, each iteration of this API and the crucial code review process. I’m happy to be part of the final stages of this long kernel journey, but I know that when it comes to colors, completing one step only unlocks new challenges.

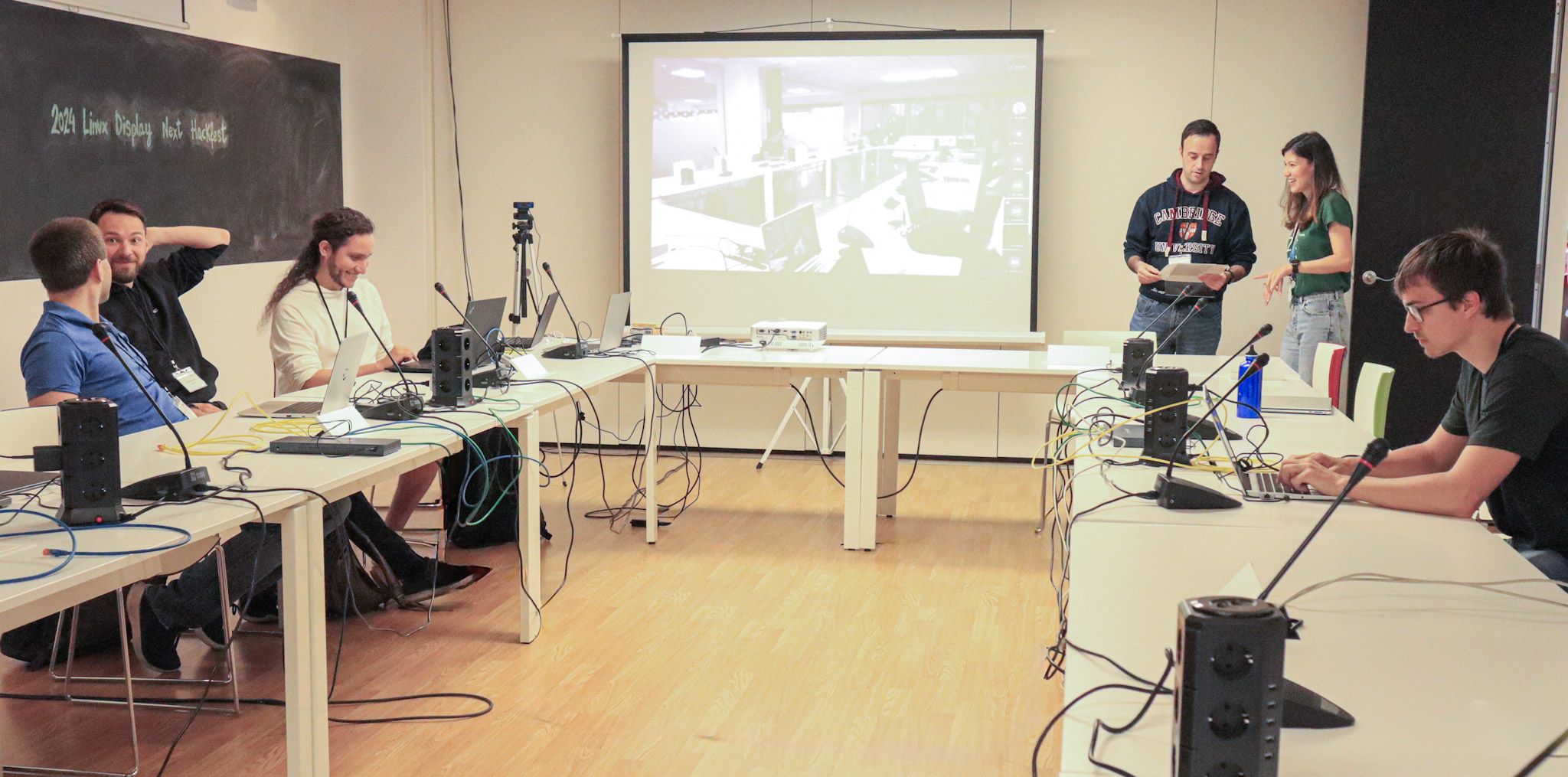

Looking forward to meeting you at this year’s Linux Display Next hackfest, organized by AMD in Toronto, to further discuss HDR, advanced color management, and other display trends.

Hi!

Today wlroots 0.19.0 has finally been released! Among the newly supported protocols, color-management-v1 lays the first stone of HDR support (backend and renderer bits are still being reviewed) and ext-image-copy-capture-v1 enhances the previous screen capture protocol with better performance. Explicit synchronization is now fully supported, and display-only devices such as gud or DisplayLink can now be used with wlroots. See the release notes for more details! I hope I’ll be able to go back to some feature work and reviews now that the release is out of the way.

In other graphics news, I’ve finished my review of the core DRM patches for the new KMS color pipeline. Other kernel folks have reviewed the patches, we’re just waiting on a user-space implementation now (which various compositor folks are working on). I’ve started a discussion about libliftoff support.

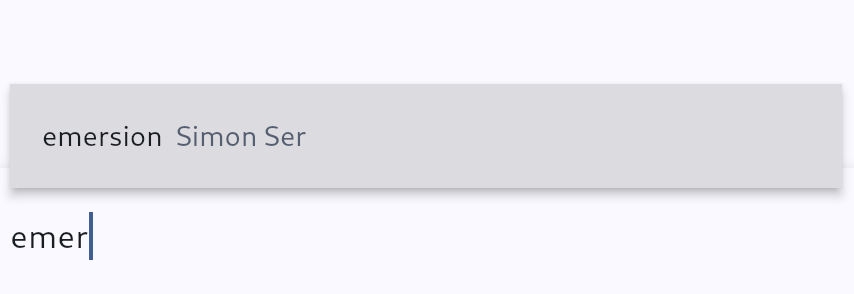

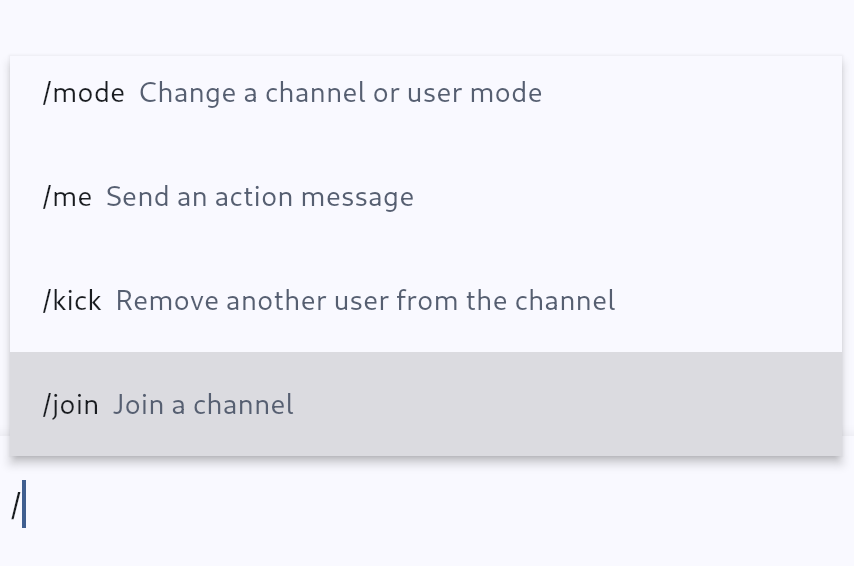

In addition to wlroots, this month I’ve also released a new version of my mobile IRC client, Goguma 0.8.0. delthas has sent a patch to synchronize pinned and muted conversations across devices via soju. Thanks to pounce, Goguma now supports message reactions (not included in the release):

My extended-isupport IRCv3 specification has been accepted. It allows servers to advertise metadata such as the maximum nickname length or IRC network name early (before the user provides a nickname and authentication details), which is useful for building nice connection UIs. I’ve posted another proposal for IRC network icons.
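As a rough illustration of what a client could do with this metadata, the sketch below parses the tokens of a single RPL_ISUPPORT (005) line into a dictionary. The sample line, the server name and the network name are invented, and using `*` as the target before registration is an assumption of this example; value escaping and the exact negotiation steps are left out, so refer to the specification for the real details.

```python
def parse_isupport(line):
    """Parse the tokens of one RPL_ISUPPORT (005) line into a dict."""
    # Drop the optional ":server" prefix.
    if line.startswith(":"):
        _, _, line = line.partition(" ")
    # Drop the trailing human-readable text after " :".
    head = line.split(" :", 1)[0]
    parts = head.split()
    if len(parts) < 2 or parts[0] != "005":
        raise ValueError("not an RPL_ISUPPORT line")
    tokens = {}
    # parts[1] is the target (assumed to be "*" before registration).
    for token in parts[2:]:
        if token.startswith("-"):
            # "-KEY" withdraws a previously advertised token.
            tokens.pop(token[1:], None)
            continue
        key, _, value = token.partition("=")
        tokens[key] = value
    return tokens


# Made-up example line; a connection UI could use it to size the nickname field.
line = ":irc.example.org 005 * NICKLEN=30 NETWORK=ExampleNet :are supported by this server"
info = parse_isupport(line)
print(info["NETWORK"], int(info["NICKLEN"]))
```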

go-smtp 0.22.0 has been released with an improved DATA command API, RRVS support (Require Recipient Valid Since), and custom hello after reset or STARTTLS. I’ve also spent quite a bit of time reaching out to companies for XDC 2025 sponsorships.

See you next month!

It has been almost a year since my last update on the Rockchip NPU, and though I'm a bit sad that I haven't had more time to work on it, I'm happy that I found some time earlier this year for this.

Quoting from my last update on the Rockchip NPU driver:

The kernel driver is able to fully use the three cores in the NPU, giving us the possibility of running 4 simultaneous object detection inferences such as the one below on a stream, at almost 30 frames per second.

All feedback has been incorporated in a new revision of the kernel driver and it was submitted to the Linux kernel mailing list.

Though I'm very happy with the direction the kernel driver is taking, I would have liked to make faster progress on it. I have spent the time since the first revision on making the Etnaviv NPU driver ready to be deployed in production (will be blogging about this soon), and also had to take some non-upstream work to pay my bills.

Next I plan to clean up the userspace driver so it's ready for review, and then I will go for a third revision of the kernel driver.

2025 was my first year at FOSDEM, and I can say it was an incredible experience where I met many colleagues from Igalia who live around the world, and also many friends from the Linux display stack who are part of my daily work and contributions to DRM/KMS. In addition, I met new faces and recognized others with whom I had interacted on some online forums and we had good and long conversations.

During FOSDEM 2025 I had the opportunity to present about kworkflow in the kernel devroom. Kworkflow is a set of tools that help kernel developers with their routine tasks and it is the tool I use for my development tasks. In short, every contribution I make to the Linux kernel is assisted by kworkflow.

The goal of my presentation was to spread the word about kworkflow. I aimed to show how the suite consolidates good practices and recommendations of the kernel workflow in short commands. These commands are easy to configure and memorize for your current work setup, or for your multiple setups.

For me, Kworkflow is a tool that accommodates the needs of different agents in the Linux kernel community. Active developers and maintainers are the main target audience for kworkflow, but it is also inviting for users and user-space developers who just want to report a problem and validate a solution without needing to know every detail of the kernel development workflow.

Something I didn’t emphasize during the presentation, and would like to correct here, is that the main author and developer of kworkflow is my colleague at Igalia, Rodrigo Siqueira. To be honest, my contributions are mostly requesting and validating new features, fixing bugs, and sharing scripts to increase feature coverage.

So, the video and slide deck of my FOSDEM presentation are available for download here.

And, as usual, you will find in this blog post the script of this presentation and more detailed explanation of the demo presented there.

Hi, I’m Melissa, a GPU kernel driver developer at Igalia, and today I’ll be giving a very inclusive talk about not letting your motivation go, by saving time with kworkflow.

So, you’re a kernel developer, or you want to be a kernel developer, or you don’t want to be a kernel developer. But you’re all united by a single need: you need to validate a custom kernel with just one change, and you need to verify that it fixes or improves something in the kernel.

And that’s a given change for a given distribution, or for a given device, or for a given subsystem…

Look at this diagram and try to figure out the number of subsystems and related work trees you can handle in the kernel.

So, whether you are a kernel developer or not, at some point you may come across this type of situation:

There is a userspace developer who wants to report a kernel issue and says:

But the userspace developer has never compiled and installed a custom kernel before. So they have to read a lot of tutorials and kernel documentation to create a kernel compilation and deployment script. Finally, the reporter managed to compile and deploy a custom kernel and reports:

And then, the kernel developer needs to reproduce this issue on their side, but they have never worked with this distribution, so they just create a new script, which is essentially the same script the reporter already created.

What’s the problem with this situation? The problem is that you keep creating new scripts!

Every time you change distribution, architecture, hardware, or project (even within the same company, the development setup may change when you switch to a different project), you create another script for your new kernel development workflow!